import shutil

import tempfile

import urllib.request

with urllib.request.urlopen('http://python.org/') as response:

with tempfile.NamedTemporaryFile(delete=False) as tmp_file:

shutil.copyfileobj(response, tmp_file)

with open(tmp_file.name) as html:

pass

import os

from pathlib import Path

import urllib.request

def get_download_path():

"""Returns the default downloads path for linux or windows"""

if os.name == 'nt':

import winreg

sub_key = r'SOFTWARE\Microsoft\Windows\CurrentVersion\Explorer\Shell Folders'

downloads_guid = '{374DE290-123F-4565-9164-39C4925E467B}'

with winreg.OpenKey(winreg.HKEY_CURRENT_USER, sub_key) as key:

location = winreg.QueryValueEx(key, downloads_guid)[0]

return location

else:

return os.path.join(os.path.expanduser('~'), 'downloads')

# 저장할 csv 주소

list_csv = [

"https://raw.githubusercontent.com/HyunchanMOON/lessons/master/lessons/gasprices.csv",

]

# 다운로드 폴더

# downloads_path = str(pathlib.Path.home() / "Downloads")

downloads_path = get_download_path()

# 폴더가 존재하지 않는다면 폴더 생성

if not os.path.isdir(downloads_path):

os.makedirs(downloads_path)

for x in range(len(list_csv)):

url = list_csv[x]

filename = url.split("/")[-1]

urllib.request.urlretrieve(url, pathlib.PurePath(downloads_path, filename))

requests를 더 많이들 사용하는데,

url RESPONSE 200 이 아닐 때 urllib.request는 ERROR 띄워주는 것이 차이 인 듯

import requests

import urllib.request

from bs4 import BeautifulSoup as bs

url = "https://thekkom.github.io/images/yellow_500px.png"

filename = "logo.png"

# urllib use

urllib.request.urlretrieve(url, filename)

# requests use

req = requests.get(url)

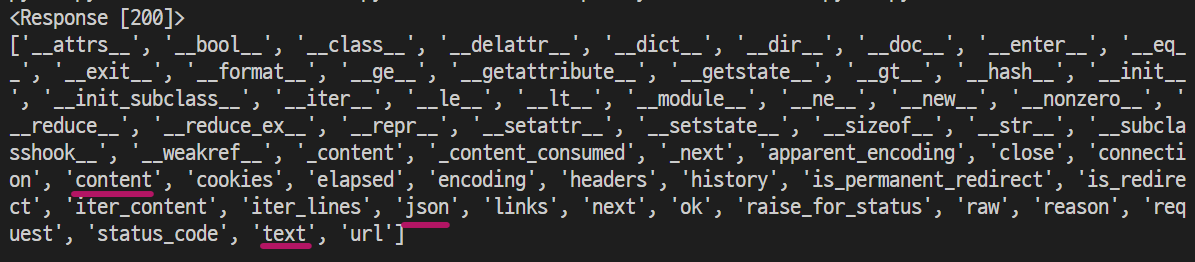

print(req) # Response [200]

print(dir(req))

# req.context / req.html / req.text

with open(filename, 'wb') as f:

f.write(req.content)

f.close()

print("save file")

# for html, use beautifulsoup4

req = requests.get(url)

html = req.text

soup = bs(html, 'html.parser')

참고

https://docs.python.org/3/library/urllib.request.html

urllib.request — Extensible library for opening URLs

Source code: Lib/urllib/request.py The urllib.request module defines functions and classes which help in opening URLs (mostly HTTP) in a complex world — basic and digest authentication, redirection...

docs.python.org

https://docs.python.org/3/howto/urllib2.html#urllib-howto

HOWTO Fetch Internet Resources Using The urllib Package

Author, Michael Foord,. Introduction: Related Articles: You may also find useful the following article on fetching web resources with Python: Basic Authentication A tutorial on Basic Authentication...

docs.python.org

https://moondol-ai.tistory.com/238

파이썬 크롤링 requests vs urllib.request 차이는?

오늘은 파이썬 크롤링을 하면서 궁금해하셨을 request와 urllib.request의 차이에 대해 말해보겠습니다. 일단 필요한 모듈을 불러옵니다. import requests import urllib.request import re from bs4 import BeautifulSoup as

moondol-ai.tistory.com

_